About the client!

The client is a large government-funded hospital and medical research institute. It runs, and collaborates on, several R&D programs around the globe that require access to large amounts of data. It also runs many IoT and data science programs that need to iterate quickly without managing complex infrastructure for each program.

The client had specific requirements that a cloud deployment was well suited to address. The main focus areas were:

- Archiving large volumes of real-time and historical data, and making that data more readily available to labs, universities, and research centers around the world.

- Supporting innovation programs exploring use cases in IoT, Artificial Intelligence/Machine Learning, and Data Science, so that they can iterate quickly and deploy proofs of concept (POCs).

The data explosion!

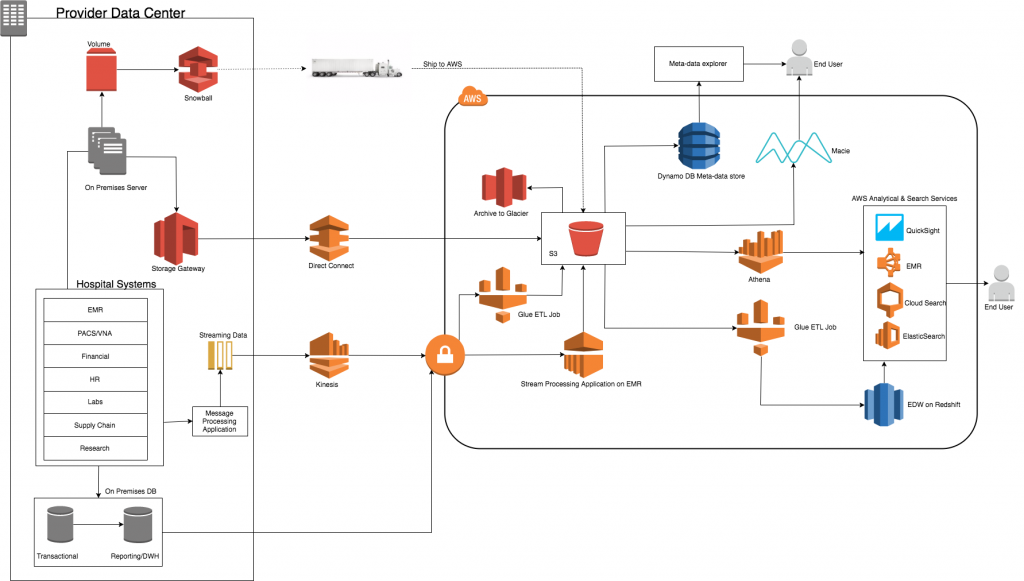

Client data is coming in from many sources:

- Genomic sequencers

- Imaging devices such as MRI, X-ray, and ultrasound machines

- Sensors and wearables for patients

- Medical equipment telemetry

- Mobile applications

Data also comes from non-clinical sources:

- Human resources

- Finance

- Supply chain

- Claims and billing

Data from these sources can be structured or unstructured. Some data arrives as streams, such as readings from patient monitors, while other data arrives in batches. Still other data arrives in near-real time, such as HL7 messages. All of this data is subject to retention policies that dictate how long it must be stored, and much of it is kept indefinitely because many systems in use today have no purge mechanism. AWS offers services to manage all of these data types, along with their retention, security, and access policies.

The main concern

The hospital currently hosts more than 400 petabytes of data and generates a large amount of new data every day. For the cloud solution to be effective, all of this data must be transferred to the cloud, which is a significant challenge: even over the fastest available internet connection, the migration would take at least 12 months. Data security and HIPAA compliance are also significant considerations.
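The 12-month figure is consistent with a simple bandwidth calculation. The sketch below assumes decimal units (1 PB = 10^15 bytes) and a hypothetical 100 Gbit/s link; neither the link speed nor the efficiency factor comes from the source. At full saturation, 400 PB over 100 Gbit/s works out to about 370 days, before accounting for protocol overhead or the new data generated each day.

```python
# Back-of-the-envelope network transfer time.
# Assumptions (not from the source): decimal units (1 PB = 10**15 bytes)
# and a hypothetical 100 Gbit/s link.

SECONDS_PER_DAY = 86_400
PETABYTE = 10**15   # bytes
GBIT = 10**9        # bits per second


def transfer_days(data_bytes: float, link_bps: float, efficiency: float = 1.0) -> float:
    """Days needed to move data_bytes over a link of link_bps bits/second.

    efficiency is the fraction of nominal bandwidth actually achieved;
    1.0 means a perfectly saturated link, which real transfers rarely reach.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = (data_bytes * 8) / (link_bps * efficiency)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    ideal = transfer_days(400 * PETABYTE, 100 * GBIT)
    derated = transfer_days(400 * PETABYTE, 100 * GBIT, efficiency=0.7)
    print(f"ideal: {ideal:.0f} days; at 70% efficiency: {derated:.0f} days")
```

Lowering `efficiency` models protocol overhead and contention; at 70% of nominal bandwidth, the same transfer takes roughly 530 days.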

Solution

AWS Snowmobile

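AWS Snowmobile is a self-contained data storage unit housed in a ruggedized shipping container, with a capacity of up to 100 petabytes per unit. Because 400+ petabytes exceeds the capacity of two units, two Snowmobiles working in parallel would each need to be filled and shipped more than once, but this still brings the migration time down from more than a year to a matter of weeks.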
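A rough sizing sketch for the Snowmobile approach, assuming up to 100 PB per unit and a per-unit on-site fill rate of about 1 Tb/s. The fill rate is an optimistic assumption, and the helper `snowmobile_plan` is hypothetical. It counts loading time only, not road transport or ingestion into Amazon S3.

```python
import math

PETABYTE = 10**15        # bytes (decimal units)
SECONDS_PER_DAY = 86_400


def snowmobile_plan(total_pb: float, units: int, capacity_pb: float = 100.0,
                    fill_bps: float = 1e12) -> tuple[int, int, float]:
    """Hypothetical sizing helper: returns (loads, rounds, fill_days).

    loads:     Snowmobile loads needed at capacity_pb per unit.
    rounds:    sequential fill-and-ship cycles with `units` trucks in parallel.
    fill_days: on-site loading time only (no transport or S3 ingestion).

    Assumes every unit loads independently at fill_bps (an optimistic
    assumption) and, conservatively, treats every load as a full one.
    """
    if units < 1:
        raise ValueError("need at least one unit")
    loads = math.ceil(total_pb / capacity_pb)
    rounds = math.ceil(loads / units)
    load_pb = min(capacity_pb, total_pb)
    days_per_round = (load_pb * PETABYTE * 8) / fill_bps / SECONDS_PER_DAY
    return loads, rounds, rounds * days_per_round


if __name__ == "__main__":
    loads, rounds, days = snowmobile_plan(400, units=2)
    print(f"{loads} loads in {rounds} rounds, about {days:.1f} days of loading")
```

For 400 PB with two units, this gives four loads in two rounds and roughly 18.5 days of loading, which suggests an end-to-end timeline of weeks rather than months once transport and ingestion are added.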

AWS Snowball Edge


AWS Snowball and AWS Snowmobile are appropriate for extremely large, secure data transfers, whether one-time or episodic. AWS Glue is a fully managed ETL service for securely moving data from on-premises systems to AWS, and Amazon Kinesis can be used to ingest streaming data.
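To illustrate the streaming path, here is a minimal sketch of packing patient-monitor readings into Amazon Kinesis Data Streams `PutRecords` batches. The reading format and the `device_id` field are hypothetical; the count and size limits are the documented `PutRecords` limits (500 records and 5 MiB per request, 1 MiB per record). Actually sending each batch, for example with boto3's `put_records(StreamName=..., Records=batch)`, is left out so the sketch runs without AWS credentials.

```python
import json
from typing import Iterable, Iterator

# Documented Kinesis Data Streams PutRecords limits.
MAX_RECORDS = 500                    # records per request
MAX_RECORD_BYTES = 1024 * 1024       # 1 MiB per record
MAX_REQUEST_BYTES = 5 * 1024 * 1024  # 5 MiB per request, including partition keys


def to_record(reading: dict) -> dict:
    """Encode one reading (hypothetical format with a device_id field)."""
    data = json.dumps(reading, separators=(",", ":")).encode("utf-8")
    key = str(reading["device_id"])
    # Conservatively count the partition key toward the per-record limit.
    if len(data) + len(key.encode("utf-8")) > MAX_RECORD_BYTES:
        raise ValueError("record exceeds the 1 MiB limit")
    return {"Data": data, "PartitionKey": key}


def batch_records(readings: Iterable[dict]) -> Iterator[list[dict]]:
    """Yield lists of records that each fit in one PutRecords request."""
    batch, batch_bytes = [], 0
    for reading in readings:
        rec = to_record(reading)
        size = len(rec["Data"]) + len(rec["PartitionKey"].encode("utf-8"))
        # Start a new batch when adding this record would break either limit.
        if batch and (len(batch) == MAX_RECORDS
                      or batch_bytes + size > MAX_REQUEST_BYTES):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(rec)
        batch_bytes += size
    if batch:
        yield batch
```

Using the device ID as the partition key sends all readings from one device to the same shard. A production producer would also need to retry any entries that the `PutRecords` response marks as failed (`FailedRecordCount` greater than zero).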

Amazon S3, Amazon S3 Standard-Infrequent Access (S3 Standard-IA), and Amazon S3 Glacier are economical storage options with pay-as-you-go pricing that can expand (or shrink) with the customer's requirements.
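Retention and cost can be combined with an S3 lifecycle rule that moves aging objects to cheaper storage classes. This is a minimal sketch assuming a hypothetical `imaging/` prefix and illustrative 30- and 90-day thresholds; it only builds the configuration dictionary, which could then be applied with boto3's `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=config)`.

```python
import json


def tiering_lifecycle(prefix: str, ia_after_days: int = 30,
                      glacier_after_days: int = 90) -> dict:
    """Build an S3 lifecycle configuration that moves objects under `prefix`
    from S3 Standard to STANDARD_IA, then to GLACIER.

    The day thresholds are illustrative assumptions. No Expiration action is
    included, matching data that must be kept indefinitely.
    """
    # S3 does not transition objects younger than 30 days to STANDARD_IA.
    if ia_after_days < 30:
        raise ValueError("STANDARD_IA transitions require at least 30 days")
    if glacier_after_days <= ia_after_days:
        raise ValueError("the GLACIER transition must follow the STANDARD_IA one")
    return {
        "Rules": [{
            "ID": f"tier-{prefix.strip('/') or 'all'}",
            "Filter": {"Prefix": prefix},
            "Status": "Enabled",
            "Transitions": [
                {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                {"Days": glacier_after_days, "StorageClass": "GLACIER"},
            ],
        }]
    }


if __name__ == "__main__":
    config = tiering_lifecycle("imaging/")  # hypothetical prefix
    print(json.dumps(config, indent=2))
```

The rule deliberately omits an `Expiration` action because much of this data is retained indefinitely; for data with a defined retention period, an `Expiration` action could be added to the same rule.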


Architecture

Ref: https://aws.amazon.com/blogs/architecture/store-protect-optimize-your-healthcare-data-with-aws/
